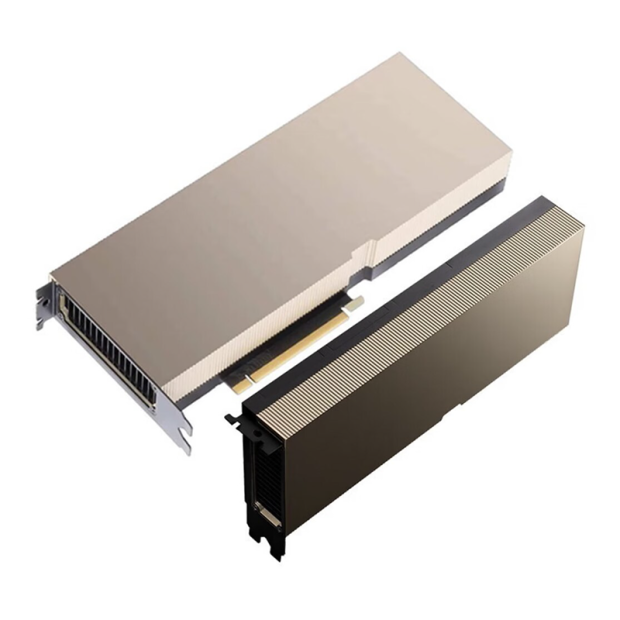

NVIDIA H800

An Order-of-Magnitude Leap for Accelerated Computing

Tap into unprecedented performance, scalability, and security for every workload with the NVIDIA® H800 Tensor Core GPU. With the NVIDIA NVLink® Switch System, up to 256 H100 GPUs can be connected to accelerate exascale workloads. The GPU also includes a dedicated Transformer Engine for trillion-parameter language models. Together, the H800's technology innovations can speed up large language models (LLMs) compared with the previous generation, delivering industry-leading conversational AI.


►Transformational AI Training

►Real-Time Deep Learning Inference

►Exascale High-Performance Computing

►Accelerated Data Analytics

►Enterprise-Ready Utilization

►Built-In Confidential Computing

►Unparalleled Performance for Large-Scale AI and HPC

| Specification | H800 |
|---|---|
| Peak FP64 | 0.8 TFLOPS |
| Peak FP64 Tensor Core | 0.8 TFLOPS |
| Peak FP32 | 51 TFLOPS |
| Peak TF32 Tensor Core | 756 TFLOPS\* |
| Peak BFLOAT16 Tensor Core | 1513 TFLOPS\* |
| Peak FP16 Tensor Core | 1513 TFLOPS\* |
| Peak FP8 Tensor Core | 3026 TFLOPS\* |
| Peak INT8 Tensor Core | 3026 TOPS\* |
| GPU Memory | 80 GB |
| GPU Memory Bandwidth | 2 TB/s |
| Decoders | 7 NVDEC, 7 JPEG |
| Max Thermal Design Power (TDP) | 350 W |
| Multi-Instance GPU (MIG) | Up to 7 MIGs @ 10 GB each |
| Form Factor | PCIe dual-slot, air-cooled |
| Interconnect | NVLink: 400 GB/s; PCIe Gen5: 128 GB/s |
| Server Options | Partner and NVIDIA-Certified Systems with 1–8 GPUs |

\* With sparsity.
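The spec table supports some quick sizing arithmetic. What follows is a minimal back-of-the-envelope sketch, not a benchmark, and it rests on stated assumptions. It assumes the starred Tensor Core figures are sparse peaks at twice the dense rate. It uses a simple roofline model to relate compute to memory bandwidth. It also treats the listed memory and link bandwidths as fully usable for a one-way transfer, which real workloads will not achieve. The helper names (`SPECS`, `dense_tflops`, `ridge_point_flops_per_byte`, `transfer_seconds`, `mig_total_gb`) are hypothetical and exist only for illustration.

```python
"""Back-of-the-envelope estimates from the H800 spec table (illustrative only)."""

# Values copied from the spec table. Assumption: starred Tensor Core
# figures are sparse peaks, roughly 2x the dense rate.
SPECS = {
    "fp16_tensor_sparse_tflops": 1513.0,
    "memory_gb": 80.0,
    "memory_bandwidth_tb_s": 2.0,
    "nvlink_gb_s": 400.0,
    "pcie_gen5_gb_s": 128.0,
    "mig_instances": 7,
    "mig_memory_gb": 10.0,
}


def dense_tflops(sparse_tflops: float) -> float:
    """Dense peak, assuming the listed sparse figure is twice the dense one."""
    return sparse_tflops / 2.0


def ridge_point_flops_per_byte(peak_tflops: float, bandwidth_tb_s: float) -> float:
    """Simple-roofline ridge point: FLOP per byte of memory traffic above which
    a kernel is limited by compute rather than by memory bandwidth."""
    # Both quantities are in units of 1e12 per second, so the scale cancels.
    return peak_tflops / bandwidth_tb_s


def transfer_seconds(payload_gb: float, link_gb_s: float) -> float:
    """Idealized time to move a payload at the listed peak link bandwidth."""
    return payload_gb / link_gb_s


def mig_total_gb(instances: int, per_instance_gb: float) -> float:
    """Memory covered by the maximum MIG layout."""
    return instances * per_instance_gb


if __name__ == "__main__":
    fp16_dense = dense_tflops(SPECS["fp16_tensor_sparse_tflops"])
    ridge = ridge_point_flops_per_byte(fp16_dense, SPECS["memory_bandwidth_tb_s"])
    nvlink_s = transfer_seconds(SPECS["memory_gb"], SPECS["nvlink_gb_s"])
    pcie_s = transfer_seconds(SPECS["memory_gb"], SPECS["pcie_gen5_gb_s"])
    mig_gb = mig_total_gb(SPECS["mig_instances"], SPECS["mig_memory_gb"])

    print(f"FP16 Tensor Core dense peak: {fp16_dense:.1f} TFLOPS")
    print(f"Ridge point (FP16 dense vs. memory bandwidth): {ridge:.1f} FLOP/byte")
    print(f"Move 80 GB over NVLink: {nvlink_s:.3f} s; over PCIe Gen5: {pcie_s:.3f} s")
    print(f"MIG: {mig_gb:.0f} GB of {SPECS['memory_gb']:.0f} GB in partitions")
```

Under these assumptions, the FP16 dense peak works out to 756.5 TFLOPS. The ridge point falls at roughly 378 FLOP per byte. Moving 80 GB takes about 0.2 s over NVLink versus about 0.63 s over PCIe Gen5. Seven 10 GB MIG instances cover 70 GB of the 80 GB.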