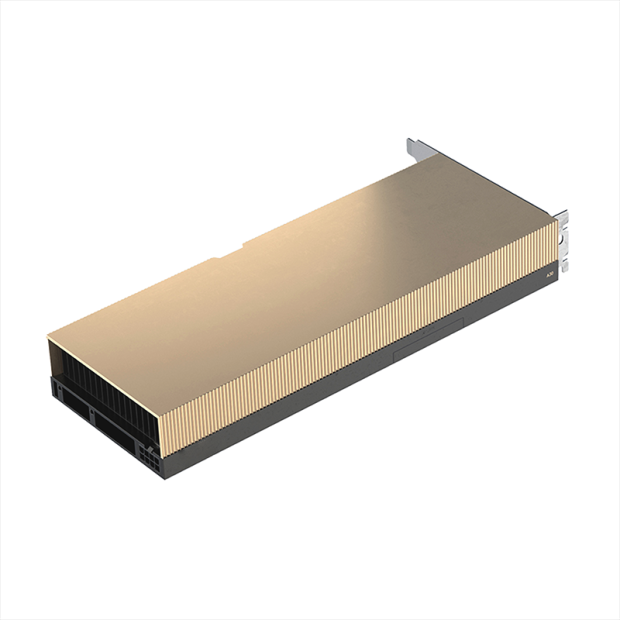

TESLA A30

Bring accelerated performance to every enterprise workload with NVIDIA A30 Tensor Core GPUs. With NVIDIA Ampere architecture Tensor Cores and Multi-Instance GPU (MIG), it delivers speedups securely across diverse workloads, including AI inference at scale and high-performance computing (HPC) applications. By combining fast memory bandwidth and low-power consumption in a PCIe form factor—optimal for mainstream servers—A30 enables an elastic data center and delivers maximum value for enterprises.

HD Gallery

- DESCRIPTION

- SPECIFICATION

- BIOS / DRIVERS UPDATE

- REQUIREMENT

| ►NVIDIA Ampere Architecture CUDA® Cores | ►Third-Generation NVIDIA NVLink® |

| ►Second-Generation RT Cores | ►Virtualization-Ready |

| ►Third-Generation Tensor Cores | ►PCI Express Gen 4 |

| ►48GB of GPU Memory | ►Data Center Efficiency and Security |

|

FP64 |

5.2 teraFLOPS |

|

FP64 Tensor Core |

10.3 teraFLOPS |

|

FP32 |

10.3 teraFLOPS |

|

TF32 Tensor Core |

82 teraFLOPS | 165 teraFLOPS* |

|

BFLOAT16 Tensor Core |

165 teraFLOPS | 330 teraFLOPS* |

|

FP16 Tensor Core |

165 teraFLOPS | 330 teraFLOPS* |

|

INT8 Tensor Core |

330 TOPS | 661 TOPS* |

|

INT4 Tensor Core |

661 TOPS | 1321 TOPS* |

|

Media engines |

1 optical flow accelerator (OFA) 1 JPEG decoder (NVJPEG) 4 video decoders (NVDEC) |

|

GPU memory |

24GB HBM2 |

|

GPU memory bandwidth |

933GB/s |

|

Interconnect |

PCIe Gen4: 64GB/s Third-gen NVLINK: 200GB/s** |

|

Form factors |

Dual-slot, full-height, full-length (FHFL) |

|

Max thermal design power (TDP) |

165W |

|

Multi-Instance GPU (MIG) |

4 GPU instances @ 6GB each 2 GPU instances @ 12GB each 1 GPU instance @ 24GB |

|

Virtual GPU (vGPU) software support |

NVIDIA AI Enterprise for VMware NVIDIA Virtual Compute Server |